This algorithm wants to find who is naked on the Internet

This is how its nudity algorithm was born. As for the website? Think of it less as a parlor trick and more as a marketing tool. If sex sells, maybe filtering it will too.

“We thought there would be potentially much broader applicability,” says Daniel. “Anyone trying to run a community but wants to filter objectionable content or keep it kid-friendly could benefit from this same algorithm.” Given the nature of the content, Algorithmia does not store or view your images; the site simply calls the nudity detection algorithm and returns a result.

In doing so, contributions to isitnude.com help refine its detection prowess. That progress will be piecemeal, however: the privacy standards that prevent Algorithmia from viewing your photos also mean that potentially useful results must be reported through other channels. “People sent us emails, tweets, telling us that a photo didn’t really work,” Daniel explains. “We used it to iterate.”

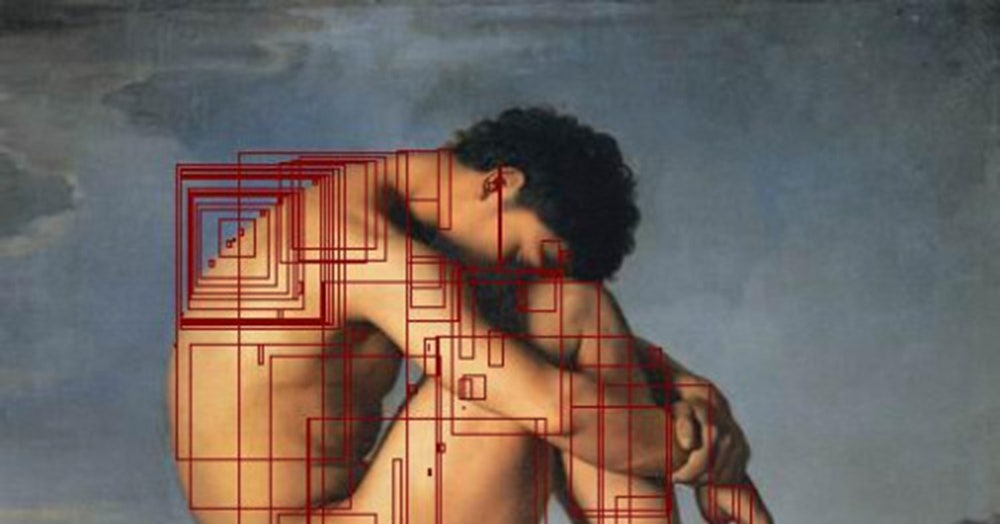

It will also take more than a simple tweak for the algorithm to become foolproof, or even close to it. For example, it can't yet recognize individual body parts, meaning an image of someone who is fully dressed except for the parts you'd most want covered would probably still pass through. And because it keys only on the amount of visible skin, otherwise innocent beach photos could end up getting flagged. An even bigger hiccup? Because the algorithm relies on skin tone recognition, it is powerless against black-and-white images.
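To see why a color-based approach stumbles on beach photos and black-and-white images, consider the minimal Python sketch below. It is not Algorithmia's algorithm, whose internals the article does not describe. It assumes a simple fixed-threshold RGB skin rule of the kind found in the skin-detection literature, and the names `is_skin`, `skin_fraction`, `looks_nude` and the 0.4 threshold are hypothetical choices made for illustration.

```python
# Illustrative only: a fixed-threshold RGB skin rule, NOT Algorithmia's method.
# All function names and the 0.4 threshold are assumptions for this sketch.

def is_skin(r, g, b):
    """Return True if an (r, g, b) pixel (0-255 each) passes a simple skin rule."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15   # needs some color spread
        and abs(r - g) > 15 and r > g and r > b  # red must dominate
    )

def skin_fraction(pixels):
    """Fraction of pixels classified as skin; 0.0 for an empty image."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(is_skin(*p) for p in pixels) / len(pixels)

def looks_nude(pixels, threshold=0.4):
    """Flag an image when the skin fraction exceeds a (hypothetical) threshold."""
    return skin_fraction(pixels) > threshold

def to_gray(pixel):
    """Convert a pixel to grayscale using the common luma weights."""
    r, g, b = pixel
    y = round(0.299 * r + 0.587 * g + 0.114 * b)
    return (y, y, y)

if __name__ == "__main__":
    # A toy "beach photo": 60% skin-toned pixels, 40% blue sea.
    beach = [(224, 172, 105)] * 6 + [(30, 90, 200)] * 4
    print("color image flagged:", looks_nude(beach))
    print("grayscale copy flagged:", looks_nude([to_gray(p) for p in beach]))
```

Under these assumptions, the toy beach image is flagged purely because most of its pixels are skin-toned, while its grayscale copy never is: every gray pixel has equal red, green, and blue values, so it can never satisfy a rule that requires red to dominate.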

There's also the broader issue of its limited reach, even when it works perfectly. There's a whole universe of potentially offensive image-based content that has nothing to do with skin, says Joshua Buxbaum, co-founder of WebPurifier, a content moderation service. “Violence,” Buxbaum offers as an example, “is complicated. We get as detailed as this: violence in a sport that is not a violent sport is violence, but if it's boxing, which is a violent sport, we allow it.” This is just a start. According to Buxbaum, a truly comprehensive image moderation suite should be able to catch everything from hate crimes to drug paraphernalia to crude gestures, depending on the needs of the client, and address the gray areas within each of these categories. Algorithmia's solution only looks for naked people and, even then, it still has the daunting task of determining whether they are lewd or not. Buxbaum offers an analogy: the simplest algorithm could filter text for profanity but would struggle to identify hate speech or bullying. In the same way, a nudity detection algorithm could find flesh but would have difficulty distinguishing whether it is revenge porn or a work of art.

This does not, however, make its nudity detection algorithm useless; it's just a blunt instrument rather than a prudish laser-guided missile. Daniel also expects these types of issues to be resolved over time, especially if there is clear business interest. “We'll continue to improve it,” he says, “or if a user of our system wants to come in and try to create a more sophisticated nudity detection algorithm, they can do that too.” If one of Algorithmia's partners managed to build a better one, they would be paid according to a model borrowed directly from Apple's App Store: they would receive a fee on each algorithm call, with Algorithmia taking 30% of the sum.